Stacks and layers – How to update video enhancement software for Android

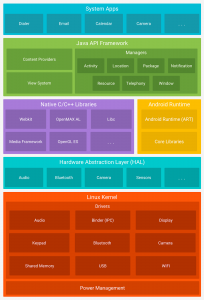

The Android operating system is split into several layers, stacked on top of each other like pancakes. This is why all the parts related to the camera are referred to as the “camera stack”. Each piece in a layer defines a specific interface (an application programming interface, or API) that describes how other pieces may interact with it.

The main purpose of all these layers is to abstract away the differences between the hardware underneath and to provide a common interface to the layer above. Isolating those interfaces makes it easier to support new components or to run Android on completely new kinds of hardware.
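The idea can be illustrated with a minimal sketch. This is not Android code: the names `CameraDevice`, `PhoneCamera`, `DroneCamera` and `average_brightness` are hypothetical, and the pixel values are made up. It only shows how an upper layer that depends on an interface keeps working when the layer below is swapped.

```python
from abc import ABC, abstractmethod
from typing import List


class CameraDevice(ABC):
    """Hypothetical lower-layer interface: the only thing upper layers see."""

    @abstractmethod
    def capture_frame(self) -> List[int]:
        """Return one frame as a flat list of pixel intensities."""


class PhoneCamera(CameraDevice):
    """One hardware-specific implementation of the interface."""

    def capture_frame(self) -> List[int]:
        return [10, 20, 30, 40]  # made-up sample data


class DroneCamera(CameraDevice):
    """A second implementation for completely different hardware."""

    def capture_frame(self) -> List[int]:
        return [200, 180, 160, 140]  # made-up sample data


def average_brightness(camera: CameraDevice) -> float:
    """Upper-layer code: depends on the interface, not on the hardware."""
    frame = camera.capture_frame()
    return sum(frame) / len(frame)


# The same upper-layer function runs unchanged on both devices.
phone_brightness = average_brightness(PhoneCamera())
drone_brightness = average_brightness(DroneCamera())
```

Supporting a new device in this sketch means writing one more `CameraDevice` implementation; `average_brightness` is never touched.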

For instance, Android was originally designed for smartphones but now runs on a variety of devices, from cars and drones to TVs and other IoT devices, just by adapting the required layer. Everything in the layers above remains unchanged. Likewise, our video enhancement software Vidhance abstracts as much as possible from the system it runs on, making it easy to port.

This separation is, unfortunately, easy to break. Previously, code written by the smartphone vendors (lower in the stack) was intermingled with edits to the Android core, which made updating the software to newer Android versions hard. With each Android version upgrade, vendors then had to redo their previous work on the core, in addition to updating their code in the lower levels.

Project Treble is an Android initiative to ease the process of updating Android on already released devices. It does so by completely separating the vendor implementations from the Android implementation.

How the Linux Kernel and Dalvik VM make apps platform-neutral

At the bottom of Android sits the Linux kernel, originally developed in 1991 for use in desktop computers and servers. It provides a level of abstraction between the raw hardware and the upper layers. It is a testament to the power of today’s mobile devices that we find this software at the heart of the Android software stack. But apps don’t run directly on Linux. Each Android app runs within its own instance of the Dalvik virtual machine, an intermediate layer. (Since Android 5.0, the Android Runtime, ART, has taken over this role from Dalvik.)

Figure 2: The Linux kernel serves as an interface to the hardware, with applications on top of core Android libraries, on top of Linux.

Running applications in virtual machines provides a number of advantages. Firstly, applications cannot interfere (intentionally or otherwise) with the operating system or other applications, and they can’t directly access the device hardware. Secondly, this enforced level of abstraction makes applications platform-neutral in that they are never tied to any specific hardware. Port the lower level (Dalvik), and the applications resting on this new foundation will still work without modification.

Open source opens the door to video enhancement software integration knowledge

We know all these details because Android is “open source”, meaning all the source code is openly available to anyone for free. The same is true for Linux, Firefox, VLC, WordPress and many other well-known programs. More specialized code that differentiates one brand from another is generally not open source.

In those cases, explicit agreements with smartphone, drone or other device manufacturers regulate access to the source code and to devices for testing before a release that includes video enhancement software like Vidhance. But even though the main product may be proprietary, many large IT companies open source parts of their infrastructure and tooling, like Google’s open source TensorFlow library for machine learning.

The same is true for Imint. For example, ooc-kean is a collection of mathematics and graphics code that we used in the past but no longer actively use for new development. This is in some sense an act of giving back to the community. Like Newton, we’re all standing on the shoulders of giants.

Video enhancement software integration where software and hardware interact – the HAL

Some products can be fully integrated into the application layer, although in most cases only user interaction takes place here. Input data is collected from the user and sent down to lower levels of the camera stack. The video is processed there and returned as output up the stack to the user. The Hardware Abstraction Layer, or HAL, is the interface between the Android software and the device hardware, such as the camera.

The Camera HAL API is defined by Android and this is where the manufacturer-specific code begins. The original HAL is implemented by the chipset vendor but can be customized by the device manufacturer since the source code is delivered with the chipset. This means most of the integration is identical for devices using the same chipset, but there might be minor differences due to changes by the manufacturer.
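A minimal sketch of that relationship, under stated assumptions: the classes `CameraHal`, `ChipsetHal` and `ManufacturerHal`, the method `configure_stream` and all configuration keys are hypothetical and do not mirror the real Camera HAL API. The point is only that a manufacturer typically overrides a small part of the chipset vendor's implementation, so most of an integration carries over between devices on the same chipset.

```python
class CameraHal:
    """Hypothetical stand-in for the interface that Android defines."""

    def configure_stream(self, width, height):
        raise NotImplementedError


class ChipsetHal(CameraHal):
    """Hypothetical reference implementation from the chipset vendor."""

    def configure_stream(self, width, height):
        return {
            "width": width,
            "height": height,
            "fps": 30,                      # made-up default
            "noise_reduction": "default",   # made-up default
        }


class ManufacturerHal(ChipsetHal):
    """Hypothetical device manufacturer build: reuses the chipset code
    and changes one detail."""

    def configure_stream(self, width, height):
        config = super().configure_stream(width, height)
        config["noise_reduction"] = "tuned"  # manufacturer-specific change
        return config


reference = ChipsetHal().configure_stream(1920, 1080)
customized = ManufacturerHal().configure_stream(1920, 1080)

# Only the keys the manufacturer touched differ between the two builds.
changed_keys = sorted(k for k in reference if reference[k] != customized[k])
```

In this toy case `changed_keys` contains a single entry, which corresponds to the minor per-device differences an integration has to account for.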

Different integration approaches for shallow and deep integrations

We generally differentiate between two types of integrations. A shallow integration is independent of the vendor and is implemented using only official Android APIs. It is simple and scales well, but it is rarely good enough: the official APIs do not give enough control over the underlying hardware, which severely impacts performance.

A deep integration takes place in the vendor implementation of the camera stack, such as the HAL. It takes more time to develop because it is more closely linked to the specific chipset and other hardware, but it also allows more freedom. Below the HAL are the specific chipset drivers. Integration there is sometimes necessary, but it is not always possible because the chipset vendor does not always share the driver source code.
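The difference between the two approaches can be sketched with a toy model. Everything here is an assumption made for illustration: `Frame`, `vendor_motion_hint`, `vendor_pipeline` and `stabilize` are hypothetical names, and the code does not describe how Vidhance or any real HAL works. It only shows that a hook inside the vendor pipeline can see information that code sitting behind the public API cannot.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Frame:
    pixels: List[int]
    # Hypothetical vendor-internal metadata: how far the frame was shifted.
    vendor_motion_hint: Optional[int] = None


def vendor_pipeline(hook: Optional[Callable[[Frame], Frame]] = None) -> Frame:
    """Toy vendor camera pipeline with an optional deep-integration hook."""
    # The scene [0, 1, 2, 3, 4] captured with a shake of 2 positions.
    frame = Frame(pixels=[3, 4, 0, 1, 2], vendor_motion_hint=2)
    if hook is not None:
        frame = hook(frame)  # deep integration runs here, inside the pipeline
    # What leaves the pipeline carries no vendor metadata (an assumption).
    return Frame(pixels=frame.pixels)


def stabilize(frame: Frame) -> Frame:
    """Toy stabilizer: undo the shift if the hint is available."""
    if frame.vendor_motion_hint is None:
        return frame  # nothing to work with, leave the frame as it is
    s = frame.vendor_motion_hint
    return Frame(pixels=frame.pixels[s:] + frame.pixels[:s], vendor_motion_hint=0)


# Shallow: stabilize after the pipeline, using only what it hands out.
shallow_result = stabilize(vendor_pipeline()).pixels

# Deep: stabilize inside the pipeline, where the hint is still present.
deep_result = vendor_pipeline(hook=stabilize).pixels
```

In this sketch the shallow path returns the shaken frame unchanged, while the deep path recovers the original ordering. The cost is that the hook depends on the vendor's pipeline and has to be adapted for each one.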

Different device vendors will not only have different requirements but also require custom modifications to the HAL implementation and solutions adapted to their unique camera pipelines. Video enhancement software like Vidhance must adjust to these special challenges, and may even need to be adapted to work well together with other software algorithms or to be combined with hardware stabilizers such as optical image stabilization (OIS).