How video compression works

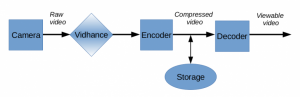

Video is compressed by an encoder for a specific video format, and the corresponding decoder turns the compressed data back into viewable video for playback. Both steps must be fast and efficient enough to keep up with live recording and live playback.

Figure 1: The camera delivers raw video frames via Vidhance to an encoder, which compresses the video before storing it. The compressed video must then be decoded before it can be viewed.

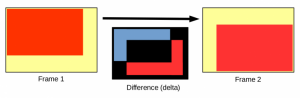

The individual frames could each be compressed as still images in formats like JPEG or PNG. This approach is simple, but it wastes space. Video compression usually works by computing and compressing only the differences between frames, called the delta. If a frame is similar to the previous one, as is often the case in video, describing how it changed takes less data than describing the full frame.

Figure 2: The red box moves from one corner to another. If we express this difference as the change in each pixel, we end up with a big delta (making all the top-left pixels yellow, and making all the bottom-right pixels red). Good compression needs something better.

Given that a digital image consists of pixels, the delta is an accumulation of differences in corresponding pixels. The delta between two pixels can be computed using the difference between the colors of those pixels. A frame delta consists of all its pixel deltas.
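The pixel and frame deltas described above can be sketched in a few lines of Python. This is a minimal illustration, not how a real encoder is implemented: the helper names (`make_frame`, `pixel_delta`, `frame_delta`, `count_changed`) and the 8x8 frame with a 3x3 box are assumptions chosen to mirror the moving red box in Figure 2.

```python
# Frames are lists of rows; each pixel is an (R, G, B) tuple of 0-255 integers.
# All names and sizes here are hypothetical, chosen only for illustration.
YELLOW = (255, 255, 0)
RED = (255, 0, 0)


def make_frame(size, box_top_left, box_size):
    """Build a yellow frame containing one red box (a toy test frame)."""
    top, left = box_top_left
    return [
        [
            RED
            if top <= y < top + box_size and left <= x < left + box_size
            else YELLOW
            for x in range(size)
        ]
        for y in range(size)
    ]


def pixel_delta(old, new):
    """Per-channel color difference between two pixels (new minus old)."""
    return tuple(n - o for o, n in zip(old, new))


def frame_delta(prev, curr):
    """A frame delta is the collection of all its pixel deltas."""
    return [
        [pixel_delta(p, c) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def count_changed(delta):
    """Number of pixels whose delta is not zero."""
    return sum(1 for row in delta for d in row if d != (0, 0, 0))


frame_a = make_frame(8, (0, 0), 3)  # box in the top-left corner
frame_b = make_frame(8, (5, 5), 3)  # box in the bottom-right corner
delta = frame_delta(frame_a, frame_b)
changed = count_changed(delta)
print(f"{changed} of {8 * 8} pixels changed")
```

Even though only one object moved, every pixel the box left and every pixel it entered ends up with a nonzero delta, which is the "big delta" problem the figure describes.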

We can visualize this delta by drawing it as its own image. If a pixel has exactly the same color in both images, its delta pixel will be black, since the delta is 0 and black is represented as (0, 0, 0) in the RGB color space. The greater the difference between corresponding pixels, the brighter their delta pixel will be. The greater the difference between the two frames, the more color and detail will be visible in the delta.
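As a rough sketch of how a delta pixel becomes a visible color, the snippet below takes the absolute per-channel difference so the result is always a valid RGB value. Using the absolute difference and measuring brightness as the channel average are assumptions made for this example, and the function names are hypothetical.

```python
def delta_pixel(old, new):
    """Absolute per-channel difference, usable directly as an RGB color."""
    return tuple(abs(n - o) for o, n in zip(old, new))


def brightness(pixel):
    """Average of the three channels: 0 is black, 255 is white."""
    return sum(pixel) / 3


GREEN = (0, 255, 0)
WHITE = (255, 255, 255)

unchanged = delta_pixel(GREEN, GREEN)                 # identical pixels
small = delta_pixel((100, 100, 100), (110, 100, 100)) # slight change
large = delta_pixel(GREEN, WHITE)                     # big change

for name, d in [("unchanged", unchanged), ("small", small), ("large", large)]:
    print(name, d, round(brightness(d), 1))
```

An unchanged pixel yields black, a slight change yields a very dark color, and a pixel that goes from green to white yields a bright purple delta.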

Why video stabilization improves video compression

Figure 3: Center: One frame of a shaky video. Left: Delta (changes of each pixel) from the previous frame without stabilizer. Right: Delta with stabilizer, much smaller and easier to compress.

The image above shows one frame of an example video in the center. To the left is the delta relating to the next frame without any stabilization, and to the right the same delta with Vidhance video stabilization. Not a lot is happening in the video, so the delta should be mostly black. But note how the outlines of the girl’s hair can be seen as purple lines. This is because adding purple (255, 0, 255) to what was previously green (0, 255, 0) results in new white (255, 255, 255) pixels.

As the video was a bit shaky, there are unwanted camera movements resulting in an unnecessarily bright delta, which makes video compression harder and the video file larger. Compare this with the steadier video on the right, which does not require as much data, making the file smaller.
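The effect of shake on delta size can be illustrated with a toy experiment. The sketch below makes several assumptions: the scene is a static grayscale texture, the shake is a 2-pixel horizontal shift, stabilization is idealized as cancelling that shift perfectly, and `zlib` stands in for a real video encoder. None of this reflects how Vidhance or any particular codec works internally.

```python
import random
import zlib

WIDTH, HEIGHT = 64, 64
rng = random.Random(0)  # fixed seed so the run is repeatable

# A static, textured grayscale scene slightly wider than one frame,
# so the "camera" has room to move sideways.
scene = [[rng.randrange(256) for _ in range(WIDTH + 8)] for _ in range(HEIGHT)]


def capture(scene, offset_x):
    """Crop a WIDTH-wide frame from the scene at a horizontal offset."""
    return [row[offset_x:offset_x + WIDTH] for row in scene]


def delta_bytes(prev, curr):
    """Per-pixel differences, wrapped to 0-255 so each fits in one byte."""
    return bytes(
        (c - p) % 256
        for prev_row, curr_row in zip(prev, curr)
        for p, c in zip(prev_row, curr_row)
    )


previous = capture(scene, 4)
shaky = capture(scene, 6)       # camera moved 2 pixels between frames
stabilized = capture(scene, 4)  # same moment with the shake cancelled

shaky_size = len(zlib.compress(delta_bytes(previous, shaky)))
stabilized_size = len(zlib.compress(delta_bytes(previous, stabilized)))
print(f"shaky delta: {shaky_size} bytes, stabilized delta: {stabilized_size} bytes")
```

Nothing in the scene changed, yet the shaky delta is full of nonzero values and barely compresses, while the stabilized delta is all zeros and shrinks to a handful of bytes.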

A video’s bitrate determines how much data is used per second of video, and therefore how much data is available to describe each frame. It may be either constant or variable. A higher bitrate enables better quality but also makes the file larger. When encoding video at a constant bitrate, the quality is limited by the amount of data allowed for each frame, so quality may be sacrificed to keep each delta size below the limit.
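To get a feel for the numbers, the average per-frame budget at a constant bitrate is simply the bitrate divided by the frame rate. The figures below (8 Mbit/s, 30 frames per second, 1920x1080 frames at 3 bytes per pixel) are illustrative assumptions, and real encoders do not necessarily spend the same amount on every frame.

```python
def average_frame_budget_bytes(bitrate_bps, frames_per_second):
    """Average number of bytes available per frame at a constant bitrate."""
    return bitrate_bps / frames_per_second / 8  # 8 bits per byte


# Hypothetical example values, not taken from any specific device or codec.
budget = average_frame_budget_bytes(8_000_000, 30)
raw_frame_bytes = 1920 * 1080 * 3  # uncompressed RGB frame
ratio = raw_frame_bytes / budget

print(f"budget per frame: {budget:.0f} bytes")
print(f"raw frame: {raw_frame_bytes} bytes")
print(f"required compression ratio: about {ratio:.0f}:1")
```

Under these assumptions each frame must on average fit in roughly 33 KB, compared with about 6.2 MB uncompressed, so anything that inflates the delta quickly costs quality.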

With a variable bitrate, a quality setting determines how much (or little) detail may be sacrificed on each frame and the file size is secondary. There is also lossless video compression – smaller file size without any loss of quality – but it only goes so far compared to the far more common lossy versions.